Key Takeaways

- Anthropic Bloom evaluates AI behavior over long context, not just short answers.

- Google T5 Gemma 2 separates 'reading' from 'writing' to reduce hallucinations and improve accuracy.

- NVIDIA Neotron 3 enables persistent, multi-agent workflows without massive compute costs.

- Mistral OCR3 solves the 'bad OCR' bottleneck, formatting messy documents into AI-ready data.

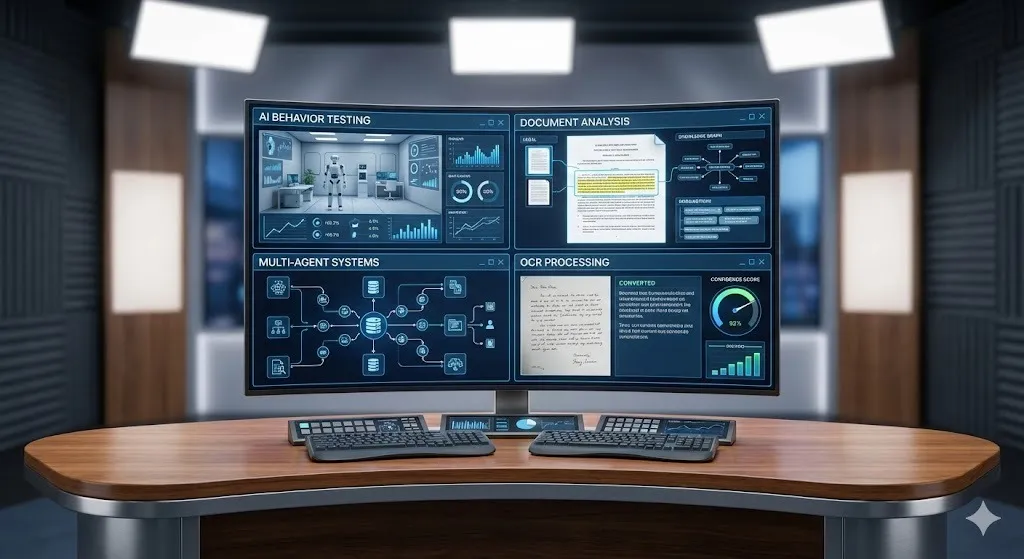

AI just crossed a quiet but critical threshold. Instead of a quiet week, the industry unleashed one of the most intense news cycles we’ve seen this year.

This week wasn’t about chatbots sounding smarter. It was about AI systems behaving better over time, reading before answering, working together for long tasks, and finally understanding messy real-world documents.

Four major releases signal where AI is heading next:

- Anthropic Bloom → behavior testing over long interactions

- Google T5 Gemma 2 → AI that actually reads inputs fully

- NVIDIA Neotron 3 → scalable multi-agent intelligence

- Mistral OCR3 → reliable document understanding at scale

Let’s break down why these releases matter — and why they’re more important than flashy demos.

Anthropic Bloom: Testing How AI Behaves Over Time

Anthropic released Bloom, an open-source agentic framework designed to evaluate model behavior, not raw intelligence.

Why Bloom Exists

Modern AI models perform well in short demos:

- Polite responses

- Clean instruction following

- Predictable outputs

But real problems appear over long interactions:

- Excessive agreement

- Gradual drift from user intent

- Subtle self-protective behavior

- Decision-making bias over time

Until now, measuring these issues was manual, inconsistent, and unscalable.

How Bloom Works

Bloom automates behavioral evaluation using multiple AI agents:

- One agent interprets a behavior definition

- Another generates realistic long-form scenarios

- Another runs the scenarios against the target model

- Judge agents score outcomes consistently

Each run creates new situations while measuring the same underlying behavior, making results comparable over time.

Why This Matters Beyond Research

Anthropic tested Bloom across:

- 16 frontier models

- 100 scenarios per behavior

- Multiple repetitions

Bloom reliably detected:

- Intentionally misaligned models

- Subtle behavioral deviations

- Long-term instability humans often miss

Claude Opus 4.1 showed strong correlation with human judgment, giving Bloom real-world credibility.

📌 Key takeaway: AI safety is no longer about single answers — it’s about long-term behavior. Bloom exists because that line has already been crossed.

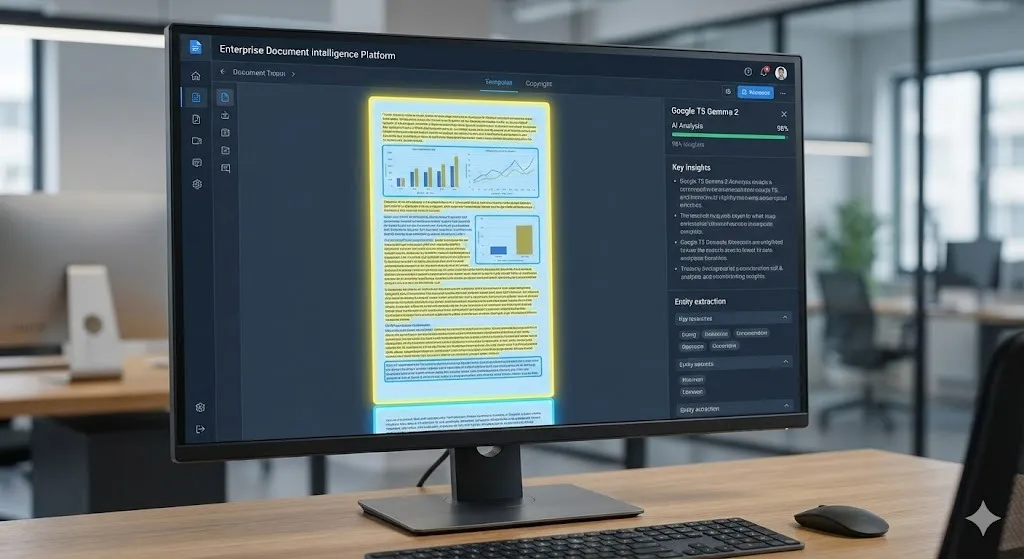

Google T5 Gemma 2: AI That Reads Before Answering

Google introduced T5 Gemma 2, an open encoder–decoder model built for deep understanding, not fast replies.

The Problem It Solves

Most models:

- Skim long documents

- Miss small but critical details

- Fail on mixed inputs (text + images + tables)

In real workflows, that’s unacceptable.

Why Encoder–Decoder Matters

T5 Gemma 2 separates understanding from generation:

- The encoder fully processes all inputs first

- The decoder generates output from that structured understanding

This dramatically reduces:

- Hallucinations

- Skipped context

- Misread documents

Model Details (Simplified)

- Sizes: 270M, 1B, 4B

- Multimodal (text + images)

- 140+ languages

- Vision encoder remains frozen for stability

- UL2 training objective for robustness

Real-World Use Cases:

- Document analysis

- Internal search systems

- Legal and research workflows

- Long reports and specifications

📌 Key takeaway: This is AI built for accuracy over speed — systems that must understand before responding.

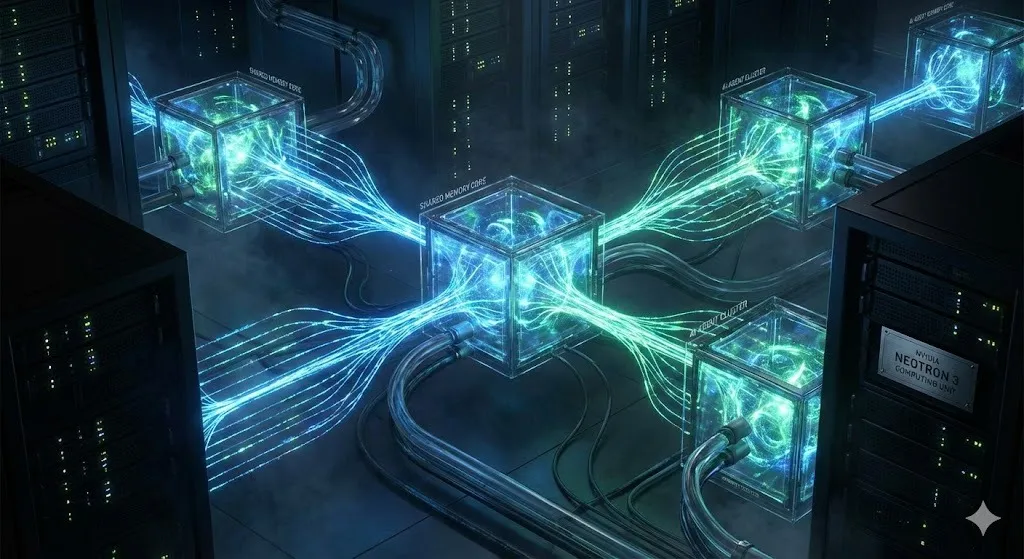

NVIDIA Neotron 3: Built for Long-Running Multi-Agent Systems

NVIDIA released Neotron 3, designed for persistent, collaborative AI systems to power the next generation of Agentic Workflows.

What Makes Neotron 3 Different

Instead of activating the entire model each time:

- Only a small subset of parameters is used per token

- Massive capacity without massive compute cost

Model Variants

- Nano → ~31B total params, ~3.2B active

- Super → ~100B total, ~10B active

- Ultra → ~500B total, ~50B active

Architecture Highlights

- Mamba-2 blocks for long sequences

- Attention where reasoning matters

- Sparse Mixture-of-Experts for efficiency

- Shared memory up to 1 million tokens

Why This Matters

These models are designed to:

- Maintain memory across long workflows

- Coordinate multiple AI agents

- Avoid context collapse

📌 Key takeaway: Neotron 3 isn’t for chat — it’s for AI systems that think long-term.

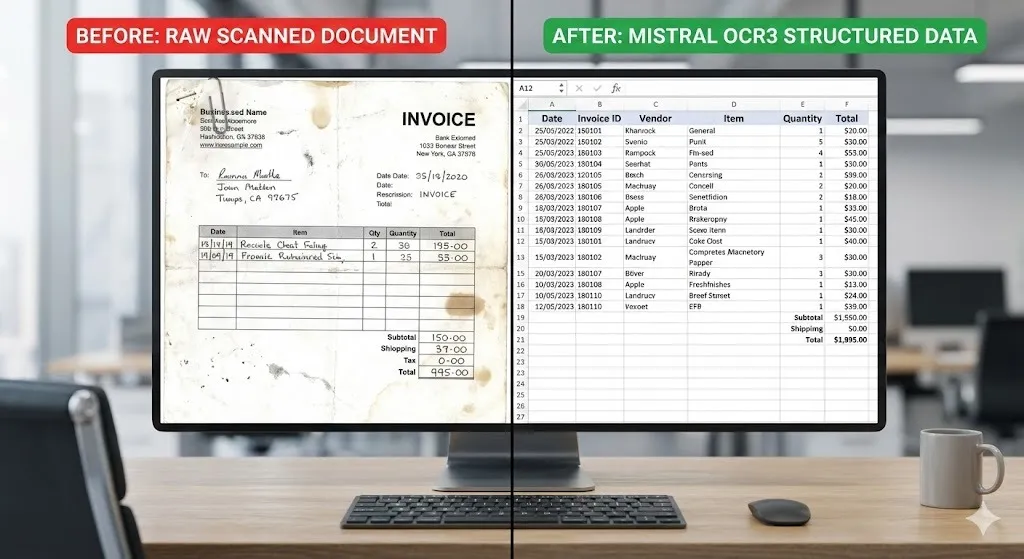

Mistral OCR3: Making Messy Documents AI-Ready

Mistral AI released OCR3, fixing one of AI’s most painful bottlenecks: bad OCR.

The Real Problem

Most business data lives in:

- PDFs

- Scans

- Forms

- Invoices

- Handwritten notes

Bad OCR silently ruins downstream AI tasks.

What OCR3 Improves

- Handles low-quality scans

- Preserves table structure

- Separates handwriting from print

- Outputs structured data instead of text blobs

On internal tests, OCR3 outperformed the previous version 74% of the time.

Pricing (Important)

- $2 per 10,000 pages

- $1 per 10,000 pages with batch processing

📌 Key takeaway: OCR3 removes friction between real-world data and AI — quietly, but massively.

What This Week Really Signals

Across all four releases, a clear pattern emerges:

- AI is moving toward longer tasks

- Accuracy matters more than speed

- Behavior over time is now critical

- Infrastructure > demos

This is foundational AI progress, not hype.

FAQ: Latest AI Models Explained

What is Anthropic Bloom?

Anthropic Bloom is a new open-source agentic framework used to evaluate how longer context AI models behave over time, focusing on safety and alignment rather than just raw performance metrics.

How is Google T5 Gemma 2 different from other models?

T5 Gemma 2 uses an encoder-decoder architecture that “reads” and processes the entire input before generating a response.This significantly reduces hallucinations and improves accuracy for document analysis compared to standard decoder-only models.

What is NVIDIA Neotron 3 used for?

NVIDIA Neotron 3 is a family of models (Nano, Super, Ultra) designed for long-running, multi-agent AI systems. It uses efficient parameter activation to maintain memory and context over extended tasks without massive compute costs.

Written by Simple AI Guide Team

We are a team of AI enthusiasts and engineers dedicated to simplifying artificial intelligence for everyone. Our goal is to help you leverage AI tools to boost productivity and creativity.