1.7 The Human Brain... in Code? Demystifying Neural Networks

You’ve probably heard the term “Neural Network” thrown around in conversations about AI, often followed by a vague wave of the hand and a mutter about “brain-like computing.” It sounds sci-fi, almost magical. But here’s the secret: at its core, a neural network is just a really, really clever way of doing math.

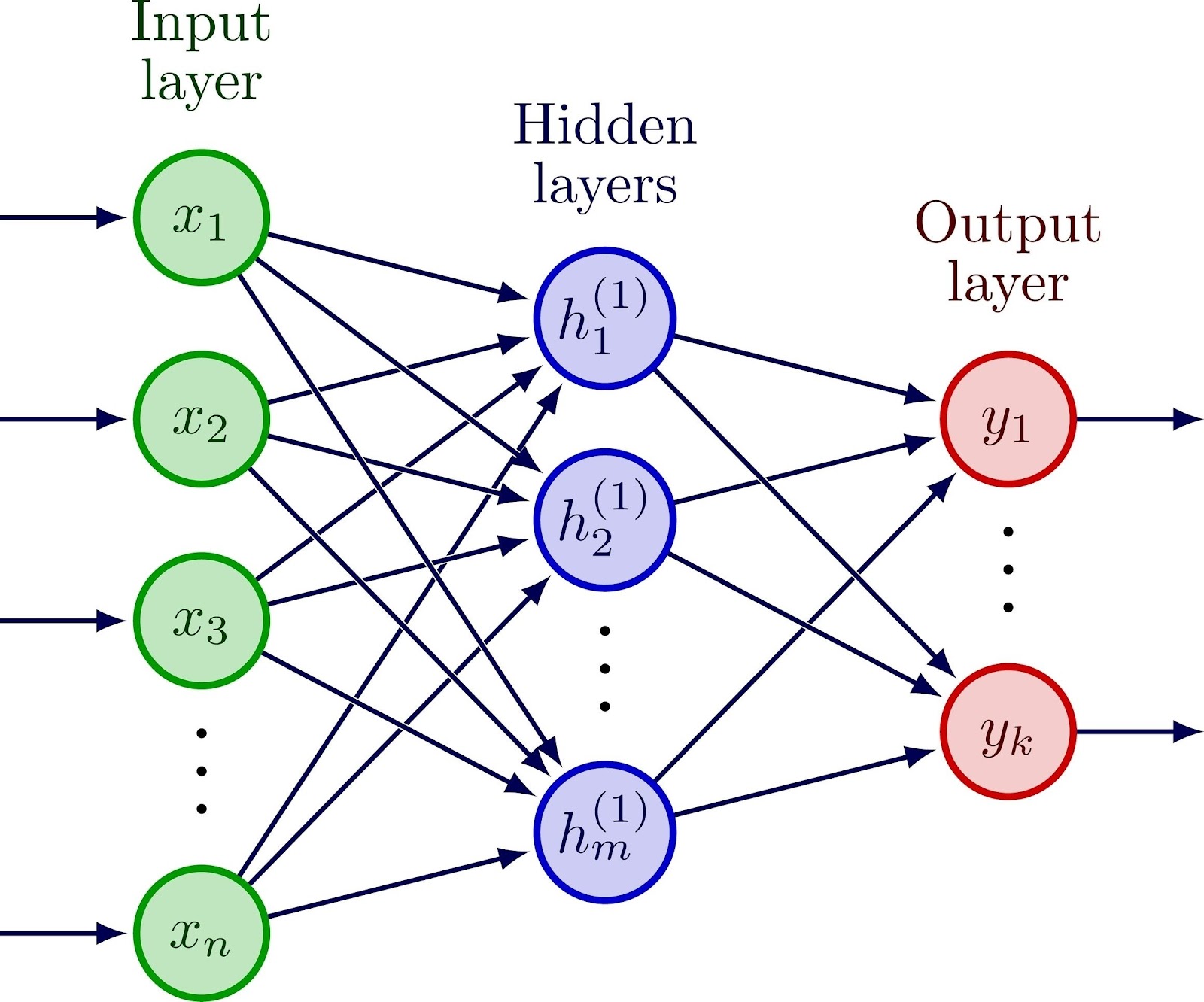

A Neural Network is an algorithm architecture inspired by the biological structure of the human brain. Just as our brains are made up of billions of interconnected neurons, these artificial networks are composed of layers of “nodes” (artificial neurons) that work together to solve a problem.

It is the fundamental building block of modern Deep Learning.

The Anatomy of a Neural Network

To understand how a neural network works, you need to know its three main parts. Think of it like a factory assembly line: materials come in, get processed at various stations, and a finished product comes out.

- The Input Layer (The Entry Door): This is where the data enters the network. If you are trying to teach an AI to recognize a cat, the input layer receives the raw pixel values of the image. It doesn’t do any thinking; it just passes the information along.

- The Hidden Layers (The “Magic” Middle): This is where the real work happens. These layers are placed between the input and output. A deep learning model can have dozens, or even hundreds, of these layers.

In these layers, the network performs calculations to identify features. The first hidden layer might detect simple edges. The next might combine those edges to find shapes (like a circle for an eye). Subsequent layers combine shapes to find complex features (like a cat’s face).

- The Output Layer (The Final Answer): This is the final layer that provides the prediction. For our cat example, the output layer might have two nodes: one for “Cat” and one for “Not Cat.” The node with the higher numerical score is the network’s final answer.

The “Neuron” in Action

So, what is an artificial “neuron” actually doing? It’s far simpler than a biological one.

Each node in a hidden layer receives inputs from the previous layer. It takes each input, multiplies it by a specific “weight” (a number indicating how important that input is), adds them all together, and then adds a “bias” value.

Finally, it passes this sum through an Activation Function. This function is like a gatekeeper that decides if the neuron should be “activated” (send a strong signal to the next layer) or remain dormant. It adds non-linearity to the network, which is crucial for learning complex patterns.

How Does It Actually Learn?

A neural network doesn’t know anything when it’s first created. It learns through a process of trial and error.

- Forward Propagation (The Guess): Data flows forward through the network, from input to output, and the network makes an initial, likely incorrect, prediction.

- Backpropagation (The Correction): The network compares its prediction to the correct answer and calculates the “error.” This information is then sent backward through the network.

- Gradient Descent (The Adjustment): Using the error from backpropagation, the network slightly adjusts the weights of the connections between neurons. The goal is to change the weights in a way that makes the error smaller next time.

This cycle is repeated millions of times across a massive dataset. Slowly but surely, the network tunes its trillions of weights until it gets really, really good at its job.

This is a high-level overview. The mathematical magic of backpropagation and gradient descent is a deep topic that we will explore fully in Module 7 (Deep Learning). For now, just remember: a neural network is a powerful, brain-inspired machine that learns from its own mistakes.